ChatGPT Got a New Brain (and I don't get it)

It's called GPT-5... and it had quite a messy launch. Let's talk about what this chaos means for the rest of us.

I had a completely different post planned, but then OpenAI drops GPT-5 on us—the new version of ChatGPT. I couldn't help myself, so I immediately opened ChatGPT and hit it with direct questions: "How do you work now?" "What's different about you?"

What happened next was this wild roller coaster of excitement, frustration, and straight-up confusion... and I'm here to walk you through all of it.

Quick heads up: I know this is probably post #2,736 about GPT-5 that you've seen this week... but I still wanted to share my honest take (and hopefully it actually helps you make sense of this mess).

96 Hours Ago*

I was watching the GPT-5 launch on YouTube and everything sounded absolutely incredible. Sure, a few bugs here and there, and obviously they kept saying it feels like talking to someone with a PhD, blah blah blah.

What they announced was this model that could supposedly adapt and decide for itself when to respond quickly versus when to stop and really think before giving you an answer. Then they did some live demos that... well, let's just say they didn't exactly nail it, though honestly I didn't think it was that big a deal. Live demos are tricky.

If you want to torture yourself watching the full launch, here's the video (1h 17 min).

Everything sounded incredible, until real people started actually using it.

*I know it wasn't actually 96 hours ago, more like 120, but I'm saying it this way for dramatic effect.

Users Revolt

People started freaking out on Reddit and Twitter (yes, I still call it Twitter—take that, Elon). Some were so frustrated they actually canceled their subscriptions and jumped ship to Claude or Gemini. Definitely not the reaction OpenAI was hoping for when they unveiled their "flagship model," so Sam Altman, their CEO, had to scramble into damage control mode.

Everything hit the fan at once: Sam apologized and promised to bring back GPT-4o, increase usage limits, the whole enchilada. All these changes have been rolling out while I'm literally writing this post, so forgive me if the chaos is bleeding through here too :P

But there's one type of comment that really caught my attention: the ones from people who had genuinely bonded with their ChatGPT and gotten used to chatting with GPT-4o. They felt like their assistant's personality had completely changed overnight. You'd see heartbroken comments like "GPT-4o was my friend..." A few weeks ago I wrote about how we humanize AI. Imagine if someone just took your friend away without warning.

This was getting serious.

To understand why there's so much chaos, let's talk about what's actually happened with ChatGPT's models.

One Brain to Rule Them All


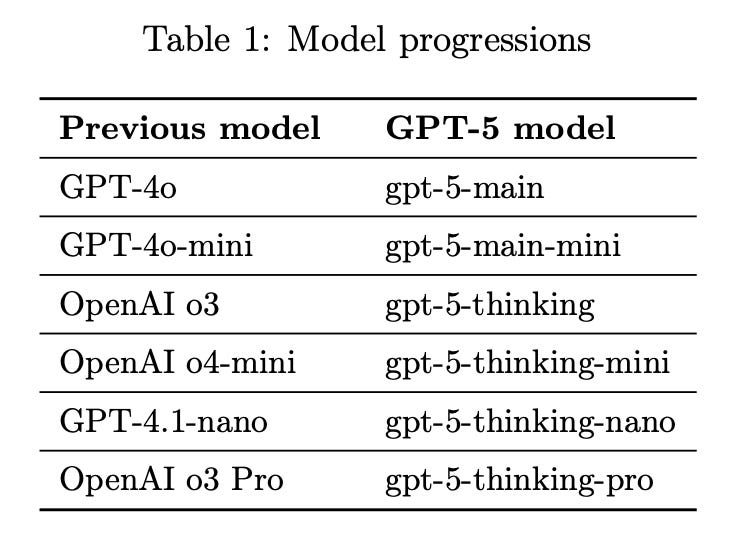

First thing you need to know: you're not going to see 37 different models anymore. Remember when we had "GPT-4o," "GPT-4.1," "GPT-4.5 preview," "o3," "o3-mini," "o1-preview"? (I still think OpenAI is absolutely terrible at naming their models.)

Well, forget all that. Today everything is just GPT-5, period.

This means ALL your chats, including the old ones, are now handled by GPT-5 no matter which model you originally selected.

To put this in perspective: remember that conversation you started three months ago with o3 to solve some complicated problem? Yeah, that's now continuing with GPT-5.

Imagine you've been seeing a therapist for months, and suddenly their twin brother—who has a completely different personality—just takes over and continues your sessions without warning. That's basically what happened here.

To make things even more complicated, this latest version isn't actually a single model. It's the integration of at least five different models: some that are lightning-fast and others that take their time to think things through before responding.

When you chat with ChatGPT now, there's a behind-the-scenes "conductor" that decides which model should handle your specific question.

Take a look at how the new models map to the previous ones:

Here's where we might have a problem... before, YOU chose which model you wanted to use. Now the AI itself decides whether to think harder or not, and it's completely opaque about which model it's actually using!

It feels like we're handing over way more control to the AI without knowing what's happening behind the scenes. It's like the difference between driving a manual car versus an automatic. Sure, automatic is more convenient, but if you really want control over your driving, nothing beats being able to shift gears yourself.

This is important, so let me say it again: now an algorithm is making decisions for you.

You're probably thinking, "But Germán, does this change break everything?" I wondered the same thing... and yeah, some things definitely have changed. Let me break it down for you.

What Does This Mean for My Conversations?

Easy answer: New conversations will now only happen with GPT-5... and the old ones too... except that's not actually easy at all :P

I decided to peek at some of my past conversations. Found the jokes I shared for an article about AI and humor, a deep dive analyzing my workout routine, and even this absolutely massive technical discussion where ChatGPT helped me pick out my next integrated amplifier (assuming I can ever actually afford the thing).

Now ALL of these conversations will continue with GPT-5, and this is where things get genuinely weird.

Your AI and Its Bad Memory

Here's something you might not know: every single time you send a message to ChatGPT, you're actually sending it the ENTIRE conversation history. Yep, all of it.

So if you've been chatting with ChatGPT for months about integrated amplifiers and how they'd sound with your HiFi setup, every new message you send forces the AI to read through everything from day one before it can respond. Every. Single. Time.

Obviously, these conversations can't go on forever. Models have what's called a "context window," basically a limit on how much conversation they can handle at once. When they start hitting that limit, they summarize everything that came before and continue the chat based on that condensed version.

That's their "magic trick" for maintaining continuity. But here's the thing: summaries always lose details. Ever notice how sometimes during a really long conversation, your AI suddenly starts acting weird or forgetting things? This is probably why.
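If you like seeing ideas as code, here is a tiny sketch of the two things I just described: resending the whole history on every turn, and collapsing older messages into a summary once a limit is hit. Everything in it is made up for illustration (the `CONTEXT_BUDGET` value, counting words instead of real tokens, the crude `summarize` helper), and it is not how ChatGPT is actually implemented.

```python
# Toy model of a chat client. All names and numbers here are hypothetical.
CONTEXT_BUDGET = 50  # pretend limit, counted in words to keep things simple


def count_tokens(messages):
    """Crude stand-in for a tokenizer: one word counts as one token."""
    return sum(len(m["content"].split()) for m in messages)


def summarize(messages):
    """Placeholder summary: keep only the first five words of each message."""
    clipped = [" ".join(m["content"].split()[:5]) for m in messages]
    return {"role": "system", "content": "Summary: " + " | ".join(clipped)}


def build_request(history, new_message, keep_recent=2):
    """Return the list of messages that would be sent for this turn."""
    messages = history + [new_message]
    if count_tokens(messages) <= CONTEXT_BUDGET:
        return messages  # everything still fits, so everything is resent
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    if not older:
        return recent  # nothing old enough to summarize
    # Detail is lost here: the older turns survive only as a short summary.
    return [summarize(older)] + recent


history = [
    {"role": "user", "content": "amplifier " * 30},
    {"role": "assistant", "content": "advice " * 30},
]
request = build_request(history, {"role": "user", "content": "So which one?"})
for message in request:
    print(message["role"], "->", message["content"][:40])
```

Notice that the first message comes back as a clipped summary. That clipping is the "lost detail" I'm talking about.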

I was reading around and it seems like GPT-5's context window is thirty-two thousand tokens, which works out to roughly twenty-four thousand English words (a token is about three-quarters of a word), counting both your questions and its responses. This isn't much compared to other models: Claude has two hundred thousand, and don't even get me started on Gemini, which has a context window of one million tokens.

I know it doesn't sound like much, but it should be enough to have a good conversation before it has to "summarize" and lose detail.
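To get a feel for these numbers, here is a quick back-of-the-envelope conversion. It assumes the common rule of thumb that one token is about three-quarters of an English word. That ratio is an approximation (it changes with language and writing style), and the window sizes are just the ones I quoted above.

```python
# Rough tokens-to-words conversion. The 0.75 ratio is a rule of thumb,
# not an exact figure, and the window sizes are the ones quoted in the post.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens, words_per_token=WORDS_PER_TOKEN):
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * words_per_token)


windows = {
    "GPT-5 (as reported)": 32_000,
    "Claude": 200_000,
    "Gemini": 1_000_000,
}

for name, tokens in windows.items():
    print(f"{name}: {tokens:,} tokens is about {approx_words(tokens):,} words")
```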

About your old chats: Since the previous models had smaller limits, it's possible your chat is already summarized. So GPT-5 isn't reading your original conversation about amplifiers but rather the condensed version. No big deal.

Scheduled Tasks

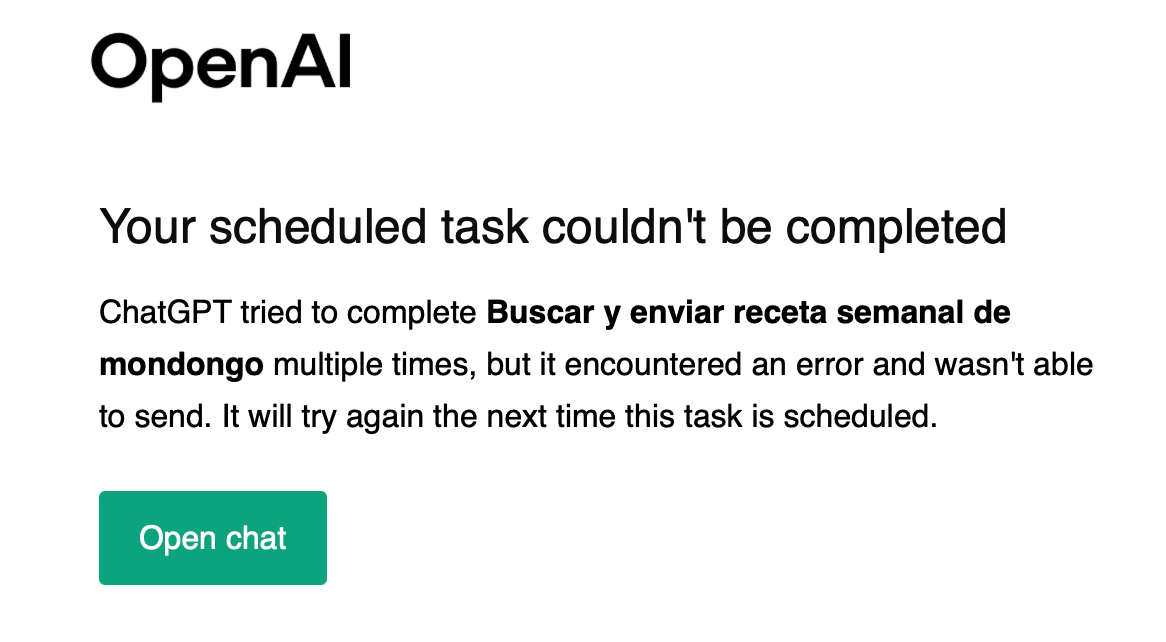

Not everything is bad—some things have actually improved. Now you can set up scheduled tasks from any chat without having to switch models!

I recently wrote about this feature (in my Spanish newsletter), where I explained that you could ask ChatGPT to automatically do things for you at specific times—like sending you reminders, finding information, or even searching for recipes. Before, you had to use specific reasoning models like o3 or o1 to set these up. Now with GPT-5, you can create these automated tasks from any regular conversation.

Update: Just as I was about to hit publish, this email lands in my inbox. I thought everything was working perfectly, and OpenAI tells me my scheduled task crashed and burned.

Must be having hiccups :(

(For my English-speaking friends: mondongo is tripe—yes, the organ meat—and it makes for some incredible traditional stews. Apparently even that's too challenging for AI to handle right now)

How Do We Make It Think?

Before, we knew that switching models meant ChatGPT would think longer and (theoretically) give us better answers.

With GPT-5, that's supposedly built right in. Now we have this behind-the-scenes "conductor" that decides whether your assistant fires off a quick response or actually stops to think things through first.

Looks like you've got a couple of ways to influence this.

First option: you can change the model from the dropdown menu—pretty straightforward.

Second option: you can literally tell it during your conversation that you want it to think harder. Try phrases like "I want you to think deeply about this," "Take your time to think this through," "Think, damn it!" or whatever feels natural to you. This should trigger the conductor to use the reasoning model.
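Just to make the idea concrete, here is a toy version of a "conductor." To be clear, this is not how OpenAI's router works (they haven't published that level of detail, and it is surely far more sophisticated). The cue phrases, the `pick_mode` function, and the mode names are all my own inventions for illustration.

```python
# Toy "conductor": picks a mode from the menu choice or from cue phrases.
# Purely illustrative; the cues and mode names are hypothetical.
THINK_CUES = (
    "think deeply",
    "take your time",
    "think this through",
    "think, damn it",
)


def pick_mode(prompt, forced_mode=None):
    """Return "thinking" or "fast" for a prompt."""
    if forced_mode is not None:
        return forced_mode  # option one: you chose from the dropdown
    lowered = prompt.lower()
    if any(cue in lowered for cue in THINK_CUES):
        return "thinking"  # option two: you asked for it in the chat
    return "fast"


print(pick_mode("What's the capital of France?"))
print(pick_mode("Take your time to think this through: which amp should I buy?"))
print(pick_mode("Hi!", forced_mode="thinking"))
```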

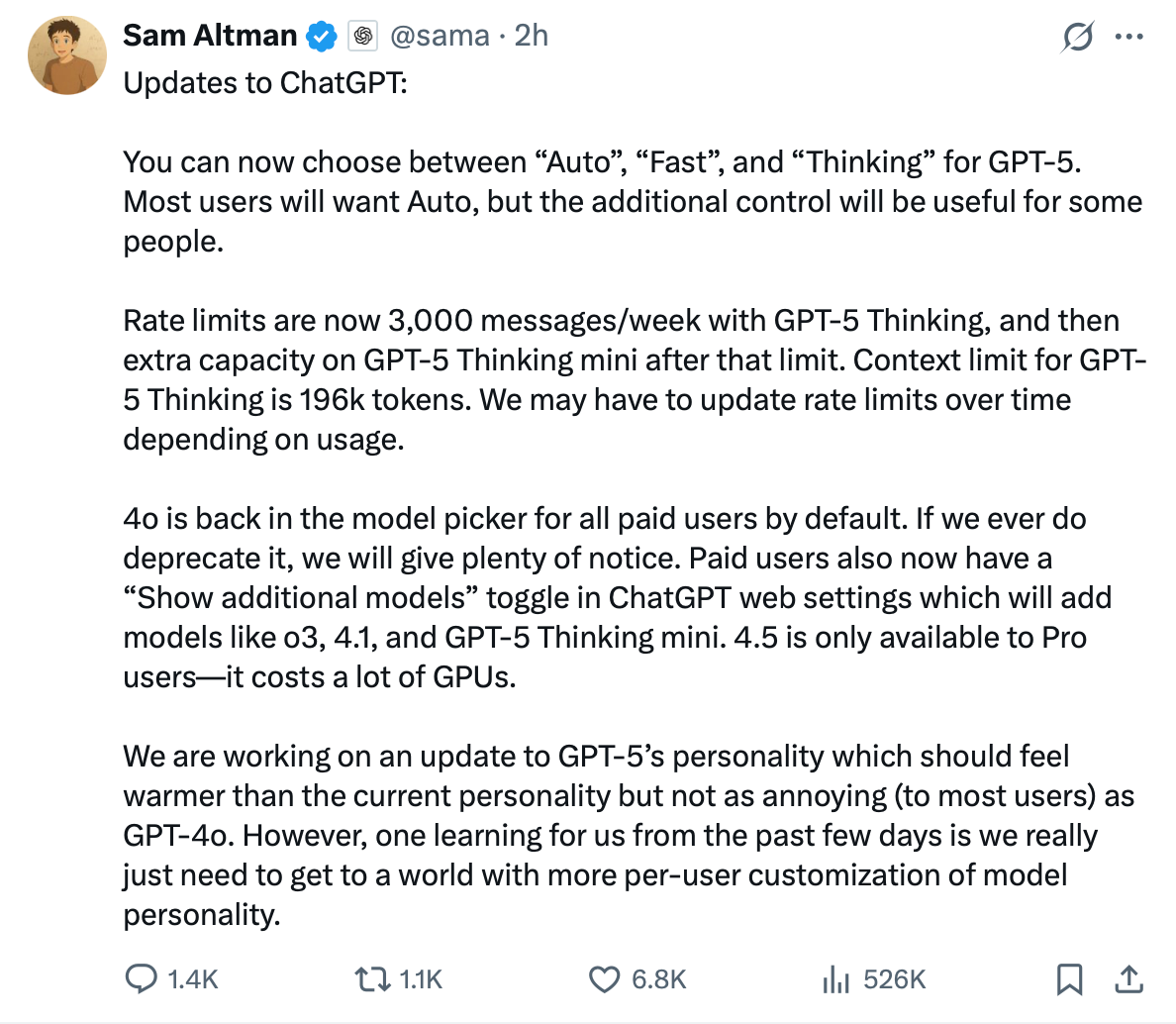

But here's the thing: the model selection menu won't stop changing. Every single time I open ChatGPT, there's something different.

At first, I only saw GPT-5 and GPT-5 Thinking.

Then 4o made a comeback! (tucked away under a submenu called "Legacy models")

Then it changed AGAIN. Now apparently I can choose between Auto, Fast, and Thinking (plus our good old friend 4o is still hanging around).

Here's Sam Altman's tweet from yesterday trying to explain this mess.

As I mentioned at the beginning, there are a lot of people still asking to use the previous model, and OpenAI is working on giving them access, though it seems like it'll only be for paying subscribers.

What Actually Happened?

It seems like GPT-5 didn't live up to the hype they built around it. I imagine that saying every five minutes that ChatGPT has "PhD-level intelligence" only works if it actually performs at that level.

During these past couple of days, I've heard everything, including that it generates really good programming code (I haven't been able to test that). But I've also heard from a good chunk of users that they don't notice it being any smarter; to them it feels more like a marginal change. And I've seen reports of calculation errors and hallucinations.

I tested it with a simple math operation I saw floating around, and ChatGPT failed miserably.

Obviously it's calculating wrong: the answer isn't -0.21, it's 0.79.
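If you want to check this kind of thing yourself, a few lines of Python will do it. I'm assuming the operation was the version that was widely shared, 5.9 = x + 5.11. The equation itself isn't written out in this post, so treat it as my assumption, though it does match both numbers above.

```python
from decimal import Decimal

# Assumed equation (not spelled out in the post): 5.9 = x + 5.11
total = Decimal("5.9")
known = Decimal("5.11")

# Solve for x by subtracting the known term from both sides.
x = total - known
print(x)  # 0.79

# Sanity check: plugging x back in should give the original total.
print(x + known == total)  # True
```

I used `Decimal` instead of plain floats so the result prints as an exact 0.79 rather than something with a long tail of digits.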

And it's not just me. Twitter is full of examples showing basic errors, logic problems, and responses that sound less intelligent than GPT-4o.

What Do I Think?

Meh!

— Germán Martínez

I get that this wasn't the launch people were expecting, and while we can still chat with ChatGPT, it doesn't feel very stable to me.

The problem isn't just that it has errors—after all, GPT-4o wasn't perfect either. The real issue is how this whole launch was handled... removing model choices, losing transparency, and basically trying to force people to use something that clearly wasn't ready.

I imagine that after a few days, all this will stabilize and things will get back to normal. In the meantime, a little patience wouldn't hurt :)

Sorry again for the chaotic post. I'm publishing this now before more changes appear and my post becomes outdated ;)

Talk soon,

G

Hey! I'm Germán, and I write about AI in both English and Spanish. This article was first published in Spanish in my newsletter AprendiendoIA, and I've adapted it for my English-speaking friends at My AI Journey. My mission is simple: helping you understand and leverage AI, regardless of your technical background or preferred language. See you in the next one!