You uploaded a document to ChatGPT... now what?

I dove into the technical world of RAG (and I'll explain it with grandma's recipes... seriously)

I'm sure you've already tried uploading your PDFs, an Excel file, or that 478-slide presentation your boss asked for yesterday.

If we believe your favorite AI company's marketing department, your assistant should "read" all your documents and answer any question perfectly, like a PhD student...

I've uploaded everything too: the user manual for my hi-fi speakers, several chess books, massive business documents full of charts and tables, and even some of my own posts in PDF format, just for fun ;)

I’d tell you it always works, but... it doesn't. Sometimes it fails, and today I want to talk about that.

But before we start, did you know that this whole process—where AI "reads" the documents you upload and responds—has a proper name in the artificial intelligence world?

It's called RAG, a short (and cool) way of saying Retrieval-Augmented Generation. In plain English: the AI first retrieves the bits of your documents that seem relevant, then generates its answer using what it found.

And well, whether those (exaggerated) promises the marketing team sold you come true depends on how RAG works.

I want to tell you what I discovered (with a twist): I'm going to try explaining it with a story about grandmas and recipes.

What does AI do with your document?

Does the AI read every word? Does it remember everything? Does it make a mental summary or just grab bits from here and there?

Imagine an AI machine powered by grandma's recipes

I've been talking with my mamama for years to get her recipes—including the Christmas turkey (stuffed, of course), seco con frejoles (a traditional Peruvian long-leaf coriander stew), cau cau (a Peruvian tripe stew), and those tamalitos verdes (green tamales) that take forever to prepare but are worth their weight in gold.

To make this more fun, imagine that instead of using ChatGPT, I have an AI machine where I want to put all that wisdom and seasoning so I can have my mamama's recipe collection always at hand.

Note: "Mamama" is what I've always called my grandmother—it's my special name for her, and switching to "grandma" just doesn't feel right.

What really happens when you upload a document?

Here's where the culinary chaos begins.

OK, so I have the machine (instead of ChatGPT), and the first thing I need to do is upload all the recipes.

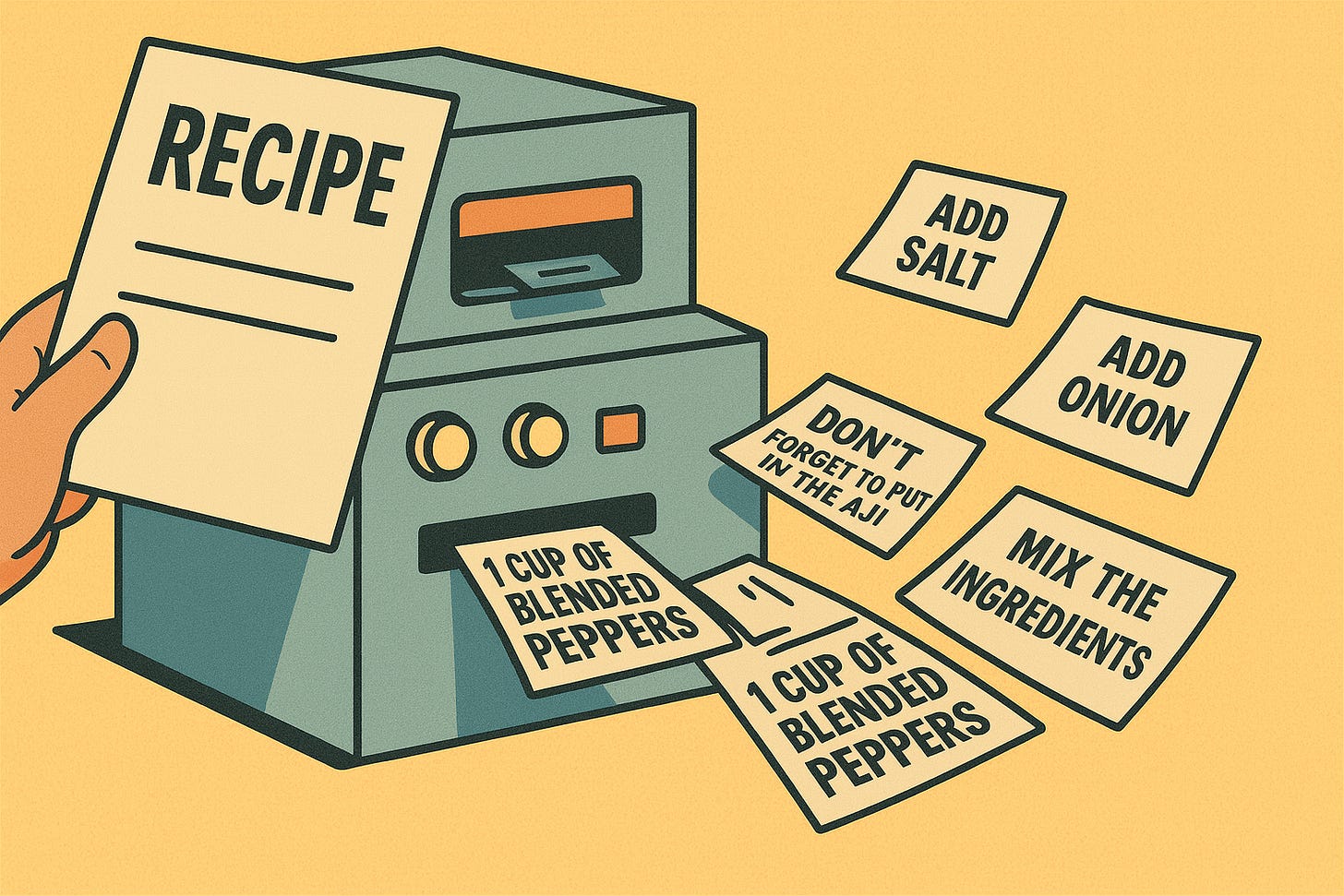

This machine won't read them all like a human would. What it's going to do is separate each recipe into a bunch of small pieces, like running everything through a paper shredder.

So each little piece will have a bit of information: one says "add salt to taste," another says "don't forget the ají panca (a-ma-zing Peruvian red pepper paste, BTW)," and another just says "1 cup of blended peppers." And to make it even more fun, the machine gives each piece a color tag based on its meaning. Pieces that talk about similar ingredients get similar colors, and pieces about cooking times get other colors.

The little pieces are known as chunks and their color tags as embeddings, and those tags are how our machine finds things later.
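For the curious, here is a minimal sketch of the shredding and color-tagging in Python. It rests on big assumptions: the pieces (often called chunks) are cut every few words, and the "color tag" is just a count of the words in each piece. Real systems use a trained embedding model instead, and the names `chunk` and `embed` are made up for this illustration.

```python
import re
from collections import Counter

def chunk(text: str, max_words: int = 8) -> list[str]:
    """Cut the text into pieces of at most max_words words (the paper shredder)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(piece: str) -> Counter:
    """Toy 'color tag': lowercase word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"\w+", piece.lower()))

recipe = "Add salt to taste. Don't forget the ají panca. Add 1 cup of blended peppers."
pieces = chunk(recipe)
tags = [embed(piece) for piece in pieces]

for piece, tag in zip(pieces, tags):
    print(repr(piece), "->", dict(tag))
```

Notice that the shredder cuts wherever the word count says so, even in the middle of a sentence. That is one reason a piece can end up missing the context it needs.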

So when you have a question like "what else was in the roast?", the machine searches through all those pieces for the ones that most resemble what you asked. Sometimes it hits the mark and gives you exactly the secret. Other times, it ends up pulling instructions you probably shouldn't follow, like "add 4 liters of papaya juice..."

And if you have many recipes, and they're also very long (like the tamales), there are pieces that don't even fit in the machine's memory. So when you ask for help, it might end up filling the gaps with its best guess, or worse, mixing different recipes... It could end up fusing the lúcuma ice cream recipe with the lasagna, and trust me, you don't want lúcuma-flavored pasta or ice cream with meat chunks.

That's why, even if you upload the most complete recipe collection, the AI's answers will always depend on how it cuts, labels, and searches through the mess of pieces it has stored.

Following me so far? Now you know that next time an AI gives you a weird answer, you probably got a "fusion recipe."

The machine that "forgets" recipes

Our recipe machine has another problem: it forgets important parts.

This happens because, even though you've put the entire collection of grandma's recipes in the machine, it can only keep some pieces in its memory at the same time.

Let's say our machine has somewhat limited memory. The technical folks call this the "context window." Basically, it's the space the machine has to look at pieces simultaneously and try to put together a coherent response.

If you ask something about turkey stuffing, but that piece just couldn't fit in the machine's memory, the AI will do what it can with what it has on hand. Maybe it'll respond by mixing steps from other recipes, or get creative and invent a new recipe. Like when you try to improvise in the kitchen (though I'm positive you do it better than the AI).
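Here is a rough sketch of that limited memory in Python. It assumes the pieces arrive already ranked from most to least relevant and that the budget is counted in words. Real models count tokens, and the function name `fit_in_context` and the example pieces are invented for this illustration.

```python
def fit_in_context(pieces: list[str], budget_words: int) -> tuple[list[str], list[str]]:
    """Keep pieces, in ranked order, while they still fit in the word budget."""
    kept, left_out, used = [], [], 0
    for piece in pieces:
        size = len(piece.split())
        if used + size <= budget_words:
            kept.append(piece)
            used += size
        else:
            left_out.append(piece)  # the model never sees this piece
    return kept, left_out

# Made-up pieces, already sorted by relevance (an assumption of this sketch).
ranked = [
    "bake the turkey at a moderate heat",
    "baste the turkey every thirty minutes",
    "the stuffing needs raisins, pecans and ground beef",
]
kept, left_out = fit_in_context(ranked, budget_words=14)
print("kept:", kept)
print("left out:", left_out)
```

With a budget of 14 words, the first two pieces fit and the stuffing piece is left out, so a question about the stuffing would be answered without the one piece that mattered.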

Why does the machine end up making 'fusion' recipes?

My mamama has thousands of delicious recipes 😍. If I want to put them all in, as we already know, what the machine will do is cut them into pieces, and when it searches for answers, it might mix pieces from different recipes without caring that they come from completely different dishes.

Why does it do this? Because the machine searches by similarity of meaning, not by document logic. If you ask about "Pollo a la brasa" (Peruvian rotisserie chicken), it's going to search for all the pieces that talk about similar things, without caring if one comes from chicken soup, another from grilled octopus, and another from tamales. To the machine, they're all similar, according to its color tags.

So when you ask "how long do I need to bake this?", the machine searches for the piece that most resembles... but that piece could come from panetón (Christmas bread), turkey, or chocolate cake.

Not what you want when you have guests for dinner.

One strategy to avoid experimental fusion responses is to upload only the recipes you need for your question, or tell the machine exactly which recipe interests you ("Only talk to me about seco con frejoles, please").
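If you like seeing things in code, here is a small Python sketch of the similarity search and of the "only this recipe" trick. Everything in it is an assumption for illustration: the tags are simple word counts compared with cosine similarity, the pieces and their baking times are made up, and `search` and `only_recipe` are hypothetical names, not any product's real API.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy tag: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count tags."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Made-up pieces: (recipe name, text of the piece).
pieces = [
    ("paneton", "bake for 45 minutes"),
    ("turkey", "bake for 4 hours"),
    ("chocolate cake", "bake for 30 minutes"),
    ("turkey", "stuff the turkey before you bake it"),
]

def search(question, pieces, top_k=2, only_recipe=None):
    """Return the top_k pieces most similar to the question, optionally from one recipe."""
    q = embed(question)
    candidates = [p for p in pieces if only_recipe is None or p[0] == only_recipe]
    return sorted(candidates, key=lambda p: cosine(q, embed(p[1])), reverse=True)[:top_k]

question = "how long do I need to bake this?"
mixed = search(question, pieces)
only_turkey = search(question, pieces, only_recipe="turkey")
print("no filter:", mixed)
print("turkey only:", only_turkey)
```

Without the filter, the best matches come from two different recipes, because "bake" looks equally good everywhere. With the filter, every piece comes from the turkey.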

Now that you understand how its search system works, you know why sometimes you might get a recipe worthy of a... culinary disaster contest.

How does the machine find the recipe you're asking for? (and why sometimes it doesn't)

When you ask the machine "how was the turkey stuffing?", it doesn't search for just one piece among all of grandma's recipes. Rather, it starts searching for various fragments that have something in common with your question.

Imagine you throw out your question and all the pieces with similar words ("stuffing," "bake," "turkey") respond "here I am!" The machine gathers those fragments, organizes them, and with what it has available, tries to put together the answer.

If you want better results, be super specific. Instead of asking "how do I cook the meat?", better ask "what's the exact baking time for stuffed turkey?" The more details you give it, the better it can search among the right pieces.

Where do those recipes that aren't grandma's come from?

There are days when the machine answers you with a "recipe" that nobody in the family recognizes. In fact, if your grandma saw it, she'd kindly remove you from the kitchen (and never let you back... ever).

Why do these culinary tragedies happen?

When the machine searches and doesn't find any piece that fits well with your question, instead of staying quiet... it fills the gaps using whatever it has available, or worse, makes something up on the spot.

It's like that cousin who wants to help and pulls out of thin air "the secret recipe for rice with strawberry mayonnaise." Nobody asked for it, nobody wants it.

This, in the artificial intelligence world, is called "hallucination." The machine invents new recipes, just because it hates admitting it doesn't have the answer.
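One common way to reduce this (not a guarantee, and not necessarily what ChatGPT does) is to make the machine admit defeat when no piece matches well enough. Here is a minimal Python sketch. It assumes a crude score, the share of question words that appear in a piece, and an arbitrary threshold of 0.3. The names `overlap`, `answer`, and `NOT_FOUND` are invented for this example.

```python
import re

NOT_FOUND = "I couldn't find that in the recipes."

def overlap(question: str, piece: str) -> float:
    """Crude similarity: fraction of the question's words that appear in the piece."""
    q = set(re.findall(r"\w+", question.lower()))
    p = set(re.findall(r"\w+", piece.lower()))
    return len(q & p) / len(q) if q else 0.0

def answer(question: str, pieces: list[str], min_score: float = 0.3) -> str:
    """Return the best-matching piece, or admit there is no good match."""
    best = max(pieces, key=lambda piece: overlap(question, piece))
    if overlap(question, best) < min_score:
        return NOT_FOUND  # stay quiet instead of improvising
    return best

# Made-up pieces for illustration.
pieces = ["add salt to taste", "stuff the turkey before baking"]
print(answer("how much salt do I add?", pieces))
print(answer("rice with strawberry mayonnaise", pieces))
```

The first question finds its piece. The second matches nothing, so the machine says so instead of playing the helpful cousin.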

Back to real life

If you made it this far, you probably already noticed that my grandma's recipe machine was just a metaphor to explain what really happens when you upload files to an AI like ChatGPT (or any other modern assistant).

When you upload a document, the AI doesn't "read" it from start to finish like a person would. What it does is cut it into many small pieces (this is called chunking). Then it transforms each piece into a kind of mathematical tag (an embedding) so it can find the piece by meaning later.

The problem is that the AI has limited space: it can only process a limited number of pieces at a time (the famous context window). If your document is large, many fragments are left out when you ask questions.

When you ask it something, the AI compares your question with the pieces it has available. It selects the most similar ones and tries to put together an answer.

The result?

Clear question + relevant piece = accurate answer

Various similar pieces from different contexts = "fusion recipe" answer

Doesn't find anything exact = invents an answer (hallucination)

That's why, even though the AI sounds very confident, it's not consulting your entire file like a human expert would: it's putting together the answer with the most similar pieces it could find, according to its own organization system.

In summary:

You're not giving it "infinite memory." Your file gets split and only part of it really comes into play.

The AI searches for similarity, not certainty. That's why sometimes it hits the mark, sometimes it mixes, and sometimes it invents.

Always check the source if the answer sounds weird, and be specific when asking to help it find the right piece.

And yes, no matter how much the models advance, sometimes grandma's touch is still unbeatable (and much more reliable).

I hope you had fun with this post. Maybe you learned something new, or at least I managed to make you hungry and craving Peruvian food :)

See you later, I'm going to lunch!

G

Note: You might not be familiar with much of the food I mention here... they're Peruvian dishes. If you've never tried them, I totally recommend you do—I'm sure you'll love them. Though they'll probably never be as delicious as the ones my mamama makes. ❤️